In particular, the great replacement of jobs has fallen hardest on low-skilled and entry-level workers. They make up a very large share of the overall workforce, and entry-level jobs are a training ground for young workers. Technocrats promoting robotics are unconcerned about the societal effects. ⁃ TN Editor

Google’s artificial intelligence lab has published a new paper describing the development of a “first-of-its-kind” vision-language-action (VLA) model. The model learns from data scraped from the internet and other sources, allowing robots to understand plain-language commands from humans while navigating their environments, much like the robot in the Disney movie Wall-E or the one in the late-1990s film Bicentennial Man.

“For decades, when people have imagined the distant future, they’ve almost always included a starring role for robots,” Vincent Vanhoucke, the head of robotics for Google DeepMind, wrote in a blog post.

Do you recall Bicentennial Man, the 1999 sci-fi comedy-drama starring Robin Williams?

Vanhoucke continued, “Robots have been cast as dependable, helpful and even charming. Yet across those same decades, the technology has remained elusive — stuck in the imagined realm of science fiction.”

Until now…

DeepMind introduced Robotics Transformer 2 (RT-2), a VLA model that learns from both web and robotics data and translates that knowledge into an understanding of its environment and of human commands.

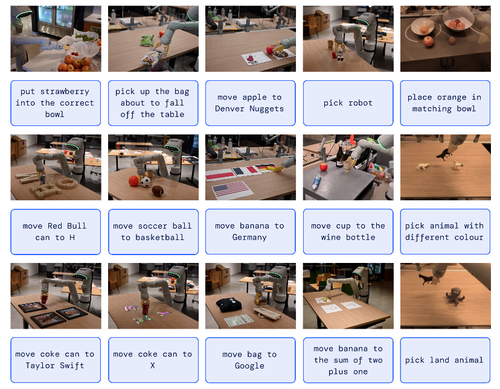

Robots have previously been trained to perform simple tasks, such as throwing away trash or cooking french fries. Now, however, a major upgrade in intelligence has arrived, allowing robots to perform tasks like the ones below:

“Unlike chatbots, robots need ‘grounding’ in the real world and their abilities. Their training isn’t just about, say, learning everything there is to know about an apple: how it grows, its physical properties, or even that one purportedly landed on Sir Isaac Newton’s head. A robot needs to be able to recognize an apple in context, distinguish it from a red ball, understand what it looks like, and most importantly, know how to pick it up,” Vanhoucke noted.

The critical takeaway is that robots are about to become more intelligent than ever, with just enough brains to replace humans in low-skill jobs. In March, Goldman Sachs told clients that automation of the service sector would translate into millions of job losses in the years ahead.
